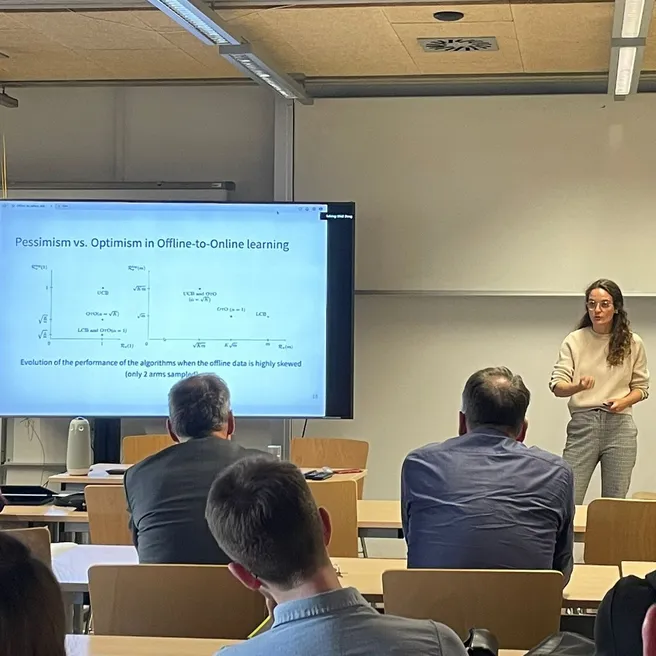

We introduce the offline-to-online learning framework through an application to Click-Through-Rate (CTR) prediction in online advertising. A decision-maker starts with a fixed dataset of prior interactions collected without their control and uses it to iteratively refine their strategy, maximizing cumulative rewards. A key challenge arises: for short horizons, the pessimistic Lower Confidence Bound (LCB) algorithm performs well, competing with strategies supported by the offline data. For longer horizons, the optimistic Upper Confidence Bound (UCB) algorithm is preferred, as it converges to the optimal strategy at a near-optimal rate. However, UCB initially over-explores, leading to worse short-term performance than LCB. This creates a strategic dilemma: a decision-maker uncertain about the deployment duration should start with LCB and gradually transition to UCB as more interactions occur. We explore how and why this transition should happen. Our main result shows that our proposed algorithm performs nearly as well as the better of LCB and UCB at any point in time.

Based on a paper with Ilbin Lee and Csaba Szeppesvari.